Here in this example I am going to schedule a HCM Extract using Flow Schedule Fast Formula.

I have tried to dynamically schedule the HCM Extract using Common Lookup and Value Set.

As of now, for each and every HCM Extract I have created seperate Flow Schedule Fast Formulas.

I am still trying to use single Flow Schedule Fast Formula to schedule multiple HCM Extracts.

The issue why I am not able to use single Flow Schedule Fast Formula for multiple HCM Extracts is,

while submitting the HCM Extracts I am not able to pass the HCM Extract Name as parameter in the Flow Schedule Fast Formula.

If anyone is able to do it, please share the details in the comment section.

Now the question arise, what we have made dynamic here. You can change the schedule of the Extract any time as per Business requirement from the

Common Lookup section. Everytime you don't need to change the Fast Formula to change the Schedule timing.

This Flow Schedule Fast Formula is still in development and I am trying to add multiple functionality to this.

As of now please consider this as a sample Flow Schedule Fast Formula.

Step 1 : Create a Common Lookup. Here we will pass the HCM Extract name and the schedule details of HCM Extract.

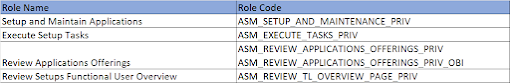

a> Navigate to Setup and Maintenance --> Tasks --> Manage Common Lookups

b> Create a new Common Lookup. Provide the details as below :

1> Lookup Type : SM_HCM_FLOW_SCHEDULE_CL (an example)

2> Meaning : Common Lookup for Flow Schedule Details

3> Description : Common Lookup for Flow Schedule Details

4> Module : Global Human Resources

5> Lookup Code : SM_HCM_ABC_INTEGRATION_V1 (passing the HCM Extract name here; an example

6> Display Sequence : 1 (pass accordingly)

7> Enabled : mark it checked

8> Start Date : 01/01/1901 (pass accordingly)

9> End Date : 12/31/4712 (pass accordingly)

10> Meaning : Daily1 (Here this value I am using to check how frequently the process will run)

11> Description : Hourly (Here this value I am using in what mode the process will run)

12> Tag : 4 (Here this value I am using in what time span this process will run ; example : in every 4 hours)

c> Save the Common Lookup.

Note : Here in the Common Lookup, I will pass the details of the Schedule, how the HCM Extract is going to be run.

Depending on this configuration, the Flow Schedule Fast Formula should be taken care.

Step 2 : Create a Table type Value Set Code. This Value Set will pass the Scheduling details of HCM Extract in the Flow Schedule Fast formula

to set the next Scheduling date.

a> Navigate to Setup and Maintenance --> Tasks --> Manage Value Sets

b> Create a new Value Set. Provide the details as below :

1> Value Set Code : SM_HCM_FLOW_SCHEDULE_VS (an example)

2> Description : Value Set for ABC Integration

3> Module : Global Human Resources

4> Validation Type : Table

5> Value Data Type : Character

6> From Clause : FND_LOOKUP_VALUES_TL FLVT,FND_LOOKUP_VALUES_B FLVB

7> Value Column Name : FLVB.LOOKUP_TYPE||'*'||FLVB.LOOKUP_CODE||'*'||FLVT.MEANING||'*'||FLVT.DESCRIPTION||'*'||FLVB.TAG

8> Value Column Type : VARCHAR2

9> ID Column Name : FLVB.LOOKUP_TYPE||'*'||FLVB.LOOKUP_CODE||'*'||FLVT.MEANING||'*'||FLVT.DESCRIPTION||'*'||FLVB.TAG

10> ID Column Type : VARCHAR2

11> WHERE Clause : FLVT.LOOKUP_TYPE = FLVB.LOOKUP_TYPE

AND FLVT.LOOKUP_CODE = FLVB.LOOKUP_CODE

AND FLVT.VIEW_APPLICATION_ID = FLVB.VIEW_APPLICATION_ID

AND FLVB.ENABLED_FLAG = 'Y'

AND FLVT.LANGUAGE = USERENV('LANG')

AND FLVT.LOOKUP_TYPE = :{PARAMETER.LOOKUP_TYPE}

AND FLVB.LOOKUP_CODE LIKE :{PARAMETER.LOOKUP_CODE}

c> Save the Value Set.

Step 3 :

Sample Flow Schedule Fast Formula :(The full Fast Formula I have written here, there may be some typo. Please correct accordingly if any. Please consider this as an example)

/****************************************************************************************************************************************

Formula Name : Schedule HCM Extract using Flow Schedule Fast Formula

Formula Type : Flow Schedule

Formula Description : Flow Schedule Formula to return a date time from Saturday 6 pm to Friday 6 pm (as an example)

Returns : NEXT_SCHEDULED_DATE

Formula Results : NEXT_SCHEDULED_DATE; This will be a date time value with yyyy-MM-dd HH:mm:ss format

Effective Date : 01/01/1901

Name Date Version Description

----------------------- ------------ --------- ------------------

Sourav Mazumder 01/01/2020 1.0 Initial Version

****************************************************************************************************************************************/

INPUTS ARE SUBMISSION_DATE(DATE), SCHEDULED_DATE(DATE)

SM_GET_HCM_ABC_UPD_SCHED_DETAILS = ' '

SM_VS_LOOKUP_TYPE = 'SM_HCM_FLOW_SCHEDULE_CL'

SM_VS_LOOKUP_CODE = 'SM_HCM_ABC_INTEGRATION_%'

SM_GET_HCM_ABC_UPD_SCHED_DETAILS = GET_VALUE_SET('SM_HCM_FLOW_SCHEDULE_VS','|=LOOKUP_TYPE='''||SM_VS_LOOKUP_TYPE||''''||'|LOOKUP_CODE='''||SM_VS_LOOKUP_CODE||'''')

SM_SCHEDULE_TYPE = SUBSTR(SM_GET_HCM_ABC_UPD_SCHED_DETAILS,(INSTR(SM_GET_HCM_ABC_UPD_SCHED_DETAILS,'*',1,2)+1),((INSTR(SM_GET_HCM_ABC_UPD_SCHED_DETAILS,'*',1,3))-((INSTR(SM_GET_HCM_ABC_UPD_SCHED_DETAILS,'*',1,2)+1))))

SM_SCHEDULE_TIME_TYPE = SUBSTR(SM_GET_HCM_ABC_UPD_SCHED_DETAILS,(INSTR(SM_GET_HCM_ABC_UPD_SCHED_DETAILS,'*',1,3)+1),((INSTR(SM_GET_HCM_ABC_UPD_SCHED_DETAILS,'*',1,4))-((INSTR(SM_GET_HCM_ABC_UPD_SCHED_DETAILS,'*',1,3)+1))))

SM_SCHEDULE_TIME = SUBSTR(SM_GET_HCM_ABC_UPD_SCHED_DETAILS,(INSTR(SM_GET_HCM_ABC_UPD_SCHED_DETAILS,'*',1,4)+1),((LENGTH(SM_GET_HCM_ABC_UPD_SCHED_DETAILS)+1)-((INSTR(SM_GET_HCM_ABC_UPD_SCHED_DETAILS,'*',1,4)+1))))

SM_PRINT_VAR_0 = ESS_LOG_WRITE('SM_GET_HCM_ABC_UPD_SCHED_DETAILS :' + SM_GET_HCM_ABC_UPD_SCHED_DETAILS)

SM_PRINT_VAR_1 = ESS_LOG_WRITE('SM_SCHEDULE_TYPE :' + SM_SCHEDULE_TYPE + '-' + TO_CHAR(INSTR(SM_GET_HCM_ABC_UPD_SCHED_DETAILS,'*',1,2)+1))

SM_PRINT_VAR_2 = ESS_LOG_WRITE('SM_SCHEDULE_TIME_TYPE :' + SM_SCHEDULE_TIME_TYPE + '-' + TO_CHAR(INSTR(SM_GET_HCM_ABC_UPD_SCHED_DETAILS,'*',1,3)+1))

SM_PRINT_VAR_3 = ESS_LOG_WRITE('SM_SCHEDULE_TIME :' + SM_SCHEDULE_TIME + '-' + TO_CHAR(INSTR(SM_GET_HCM_ABC_UPD_SCHED_DETAILS,'*',1,4)+1))

SM_GET_SUBMISSION_DATE = TO_CHAR(SUBMISSION_DATE)

SM_GET_SCHEDULED_DATE = TO_CHAR(SCHEDULED_DATE)

SM_PRINT_SUBMISSION_DATE = ESS_LOG_WRITE('SM_GET_SUBMISSION_DATE :' + SM_GET_SUBMISSION_DATE)

SM_PRINT_SCHEDULED_DATE = ESS_LOG_WRITE('SM_GET_SCHEDULED_DATE :' + SM_GET_SCHEDULED_DATE)

SM_GET_DAY_END = TO_NUMBER(SUBSTR(TO_CHAR(SCHEDULED_DATE,'YYYY-MM-DD HH24:mm:ss'),12,2))

SM_GET_DAY_NUMBER = TO_CHAR(SCHEDULED_DATE,'D')

SM_PRINT_DAY_END = ESS_LOG_WRITE('SM_GET_DAY_END :' + TO_CHAR(SM_GET_DAY_END))

SM_PRINT_DAY_NUMBER = ESS_LOG_WRITE('SM_GET_DAY_NUMBER :' + SM_GET_DAY_NUMBER)

SM_GET_SUNDAY_NUMBER = TO_CHAR(TO_DATE('2020-01-05','YYYY-MM-DD'),'D')

SM_GET_MONDAY_NUMBER = TO_CHAR(TO_DATE('2020-01-06','YYYY-MM-DD'),'D')

SM_GET_TUESDAY_NUMBER = TO_CHAR(TO_DATE('2020-01-07','YYYY-MM-DD'),'D')

SM_GET_WEDNESDAY_NUMBER = TO_CHAR(TO_DATE('2020-01-08','YYYY-MM-DD'),'D')

SM_GET_THURSDAY_NUMBER = TO_CHAR(TO_DATE('2020-01-09','YYYY-MM-DD'),'D')

SM_GET_FRIDAY_NUMBER = TO_CHAR(TO_DATE('2020-01-10','YYYY-MM-DD'),'D')

SM_GET_SATURDAY_NUMBER = TO_CHAR(TO_DATE('2020-01-11','YYYY-MM-DD'),'D')

IF UPPER(SM_SCHEDULE_TIME_TYPE) = 'HOURLY' THEN

NEW_SCHEDULED_DATE = ADD_DAYS(SCHEDULED_DATE,((1/24)*TO_NUMBER(SM_SCHEDULE_TIME)))

IF UPPER(SM_SCHEDULE_TIME_TYPE) = 'MINUTELY' THEN

NEW_SCHEDULED_DATE = ADD_DAYS(SCHEDULED_DATE,((1/24)*(1/60)*TO_NUMBER(SM_SCHEDULE_TIME)))

IF (SM_GET_DAY_NUMBER = SM_GET_FRIDAY_NUMBER AND SM_GET_DAY_END > 23) THEN

NEXT_SCHEDULED_DATE = ADD_DAYS(SCHEDULED_DATE,1)

ELSE

NEXT_SCHEDULED_DATE = NEW_SCHEDULED_DATE

SM_PRINT_NEXT_SCHEDULED_DATE = ESS_LOG_WRITE('NEXT_SCHEDULED_DATE :' + TO_CHAR(NEXT_SCHEDULED_DATE))

RETURN NEXT_SCHEDULED_DATE

/***************************************************************************************************************************************/

Step 4 : Now navigate to My Clients Group --> Data Exchange --> Submit Extract. Search the HCM Extract you want to submit using the Flow Schedule.

In the Schedule section click on the dropdown and change the value to "Using a schedule". Now in the Frequency pass the Flow Schedule Fast

Formula name. Put the Start Date when the HCM Extract should run for the first time. Pass the End date upto when the HCM Extract should

run using the Flow Schedule.